Face Generation

In this project, we will define and train a DCGAN on a dataset of faces. Our goal is to get a generator network to generate new images of faces that look as realistic as possible! The project will be broken down into a series of tasks from loading in data to defining and training adversarial networks. At the end of the notebook, we will be able to visualize the results of our trained Generator to see how it performs; our generated samples should look like fairly realistic faces with small amounts of noise.

Get the Data

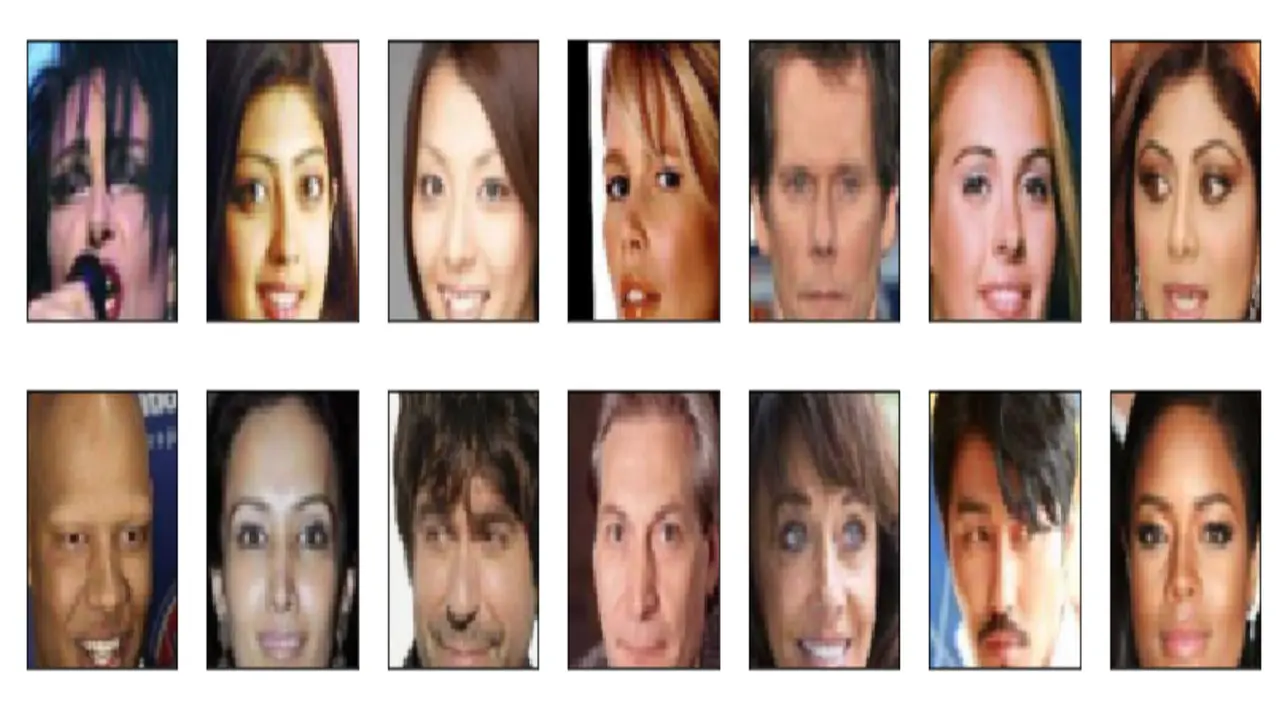

We will use the CelebFaces Attributes Dataset (CelebA) to train our adversarial networks.

Pre-processed Data

Since the project’s main focus is on building the GANs, we’ve done some of the pre-processing for you. Each of the CelebA images has been cropped to remove parts of the image that don’t include a face, then resized down to 64x64x3 NumPy images.

Visualize the CelebA Data

The CelebA dataset contains over 200,000 celebrity images with annotations. Since we are going to be generating faces, we won’t need the annotations; we will only need the images. Note that these are color images with 3 color channels (RGB) each.

Load data

import os

import torch
from torchvision import datasets, transforms

def get_dataloader(batch_size, image_size, data_dir='processed_celeba_small/'):
    """
    Batch the neural network data using DataLoader
    :param batch_size: The size of each batch; the number of images in a batch
    :param image_size: The square size of the image data (x, y)
    :param data_dir: Directory where image data is located
    :return: DataLoader with batched data
    """
    # resize each image to image_size and convert it to a tensor in [0, 1]
    transform = transforms.Compose([transforms.Resize(image_size),
                                    transforms.ToTensor()])

    train_path = os.path.join(data_dir, "celeba/")
    my_dataset = datasets.ImageFolder(train_path, transform=transform)

    # wrap the dataset in a shuffling DataLoader
    data_loader = torch.utils.data.DataLoader(dataset=my_dataset,
                                              batch_size=batch_size,
                                              shuffle=True, num_workers=4)
    return data_loader

Create a DataLoader

# Call your function and get a dataloader

celeba_train_loader = get_dataloader(batch_size, img_size)

Next, we can view some images! We should see square images of somewhat-centered faces.

# obtain one batch of training images
dataiter = iter(celeba_train_loader)
images, _ = next(dataiter)  # _ for no labels

# plot the images in the batch
fig = plt.figure(figsize=(20, 4))
plot_size = 20
for idx in np.arange(plot_size):
    # add_subplot needs integer grid dimensions, hence the floor division
    ax = fig.add_subplot(2, plot_size // 2, idx + 1, xticks=[], yticks=[])
    imshow(images[idx])
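The `imshow` helper called above is not defined in this excerpt. A minimal sketch of what such a helper might look like, assuming each image is a `(channels, height, width)` tensor with values in the range 0 to 1, as `ToTensor` produces:

```python
import matplotlib.pyplot as plt
import numpy as np
import torch


def imshow(img):
    """Display a (C, H, W) image tensor with values in [0, 1]."""
    # matplotlib expects (H, W, C), so move the channel axis last
    npimg = img.detach().cpu().numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))


# Example with a random stand-in image rather than real CelebA data
imshow(torch.rand(3, 32, 32))
```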

Pre-process your image data and scale it to a pixel range of -1 to 1

We need to do a bit of pre-processing. The output of a tanh-activated generator contains pixel values in the range -1 to 1, so we need to rescale our training images to the same range. (Right now, they are in the range 0 to 1.)

def scale(x, feature_range=(-1, 1)):
    ''' Scale takes in an image x and returns that image, scaled
    with a feature_range of pixel values from -1 to 1.
    This function assumes that the input x is already scaled from 0-1.'''
    # assume x is scaled to (0, 1)
    # scale to feature_range and return scaled x
    # (named range_min/range_max to avoid shadowing the built-in min and max)
    range_min, range_max = feature_range
    x = x * (range_max - range_min) + range_min
    return x

# check scaled range

# should be close to -1 to 1

img = images[0]

scaled_img = scale(img)

print('Min: ', scaled_img.min())

print('Max: ', scaled_img.max())

Min: tensor(-0.9294)

Max: tensor(1.)
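To make the mapping concrete, here is a small self-contained check of the same linear rescaling on three known values. The `unscale` function is a hypothetical helper that is not part of the notebook; it is included only to show the inverse mapping, which can be handy when displaying generated images.

```python
import torch


def scale(x, feature_range=(-1, 1)):
    """Map values in [0, 1] linearly onto feature_range."""
    low, high = feature_range
    return x * (high - low) + low


def unscale(x, feature_range=(-1, 1)):
    """Hypothetical inverse of scale: map feature_range back onto [0, 1]."""
    low, high = feature_range
    return (x - low) / (high - low)


pixels = torch.tensor([0.0, 0.5, 1.0])
scaled = scale(pixels)       # 0 -> -1, 0.5 -> 0, 1 -> 1
restored = unscale(scaled)   # back to 0, 0.5, 1
```

By the same arithmetic, the printed minimum of -0.9294 corresponds to an original pixel value of about 0.035.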

Define the Model

A GAN consists of two adversarial networks, a discriminator and a generator.

Discriminator

Our first task will be to define the discriminator. This is a convolutional classifier without any maxpooling layers. To deal with this complex data, it’s suggested we use a deep network with normalization.
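The class definition itself is not shown in this write-up. The following is a sketch that reproduces the layer structure printed in the "Build complete network" output further down (three stride-2 convolutions followed by a linear layer). The `conv` helper name, the leaky ReLU activations, and their 0.2 slope are assumptions, since the printed summary does not show activations.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv(in_channels, out_channels, kernel_size=4, stride=2, padding=1, batch_norm=True):
    """Hypothetical helper: a conv layer optionally followed by batch norm."""
    layers = [nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)]
    if batch_norm:
        layers.append(nn.BatchNorm2d(out_channels))
    return nn.Sequential(*layers)


class Discriminator(nn.Module):
    def __init__(self, conv_dim=32):
        super().__init__()
        self.conv_dim = conv_dim
        self.conv1 = conv(3, conv_dim, batch_norm=False)   # 32x32 -> 16x16
        self.conv2 = conv(conv_dim, conv_dim * 2)          # 16x16 -> 8x8
        self.conv3 = conv(conv_dim * 2, conv_dim * 4)      # 8x8 -> 4x4
        self.fc = nn.Linear(conv_dim * 4 * 4 * 4, 1)

    def forward(self, x):
        # assumed activation: leaky ReLU with slope 0.2
        x = F.leaky_relu(self.conv1(x), 0.2)
        x = F.leaky_relu(self.conv2(x), 0.2)
        x = F.leaky_relu(self.conv3(x), 0.2)
        x = x.view(-1, self.conv_dim * 4 * 4 * 4)
        # return raw logits; the sigmoid is applied inside the loss
        return self.fc(x)
```

Returning logits rather than probabilities matches the use of `BCEWithLogitsLoss` in the loss functions later on.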

tests.test_discriminator(Discriminator)

Tests Passed

Generator

The generator should upsample an input and generate a new image of the same size as our training data, 32x32x3. This should be mostly transpose convolutional layers with normalization applied to the outputs.
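As with the discriminator, the class definition is not shown here. The sketch below reproduces the layer structure printed in the "Build complete network" output (a linear layer followed by three stride-2 transpose convolutions). The `deconv` helper name and the ReLU activations are assumptions; the final tanh follows from the text's statement that the generator is tanh activated.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def deconv(in_channels, out_channels, kernel_size=4, stride=2, padding=1, batch_norm=True):
    """Hypothetical helper: a transpose conv layer optionally followed by batch norm."""
    layers = [nn.ConvTranspose2d(in_channels, out_channels, kernel_size, stride, padding, bias=False)]
    if batch_norm:
        layers.append(nn.BatchNorm2d(out_channels))
    return nn.Sequential(*layers)


class Generator(nn.Module):
    def __init__(self, z_size, conv_dim=32):
        super().__init__()
        self.conv_dim = conv_dim
        self.fc = nn.Linear(z_size, conv_dim * 4 * 4 * 4)
        self.t_conv1 = deconv(conv_dim * 4, conv_dim * 2)       # 4x4 -> 8x8
        self.t_conv2 = deconv(conv_dim * 2, conv_dim)           # 8x8 -> 16x16
        self.t_conv3 = deconv(conv_dim, 3, batch_norm=False)    # 16x16 -> 32x32

    def forward(self, z):
        x = self.fc(z)
        # reshape the flat vector into a (conv_dim*4, 4, 4) feature map
        x = x.view(-1, self.conv_dim * 4, 4, 4)
        x = F.relu(self.t_conv1(x))   # assumed activation
        x = F.relu(self.t_conv2(x))   # assumed activation
        # tanh keeps output pixel values in the range -1 to 1
        return torch.tanh(self.t_conv3(x))
```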

tests.test_generator(Generator)

Tests Passed

Initialize the weights of your networks

To help our models converge, we should initialize the weights of the convolutional and linear layers in our model. The original DCGAN paper says:

All weights were initialized from a zero-centered Normal distribution with standard deviation 0.02.

So, our next task will be to define a weight initialization function that does just this!

def weights_init_normal(m):
    """
    Applies initial weights to certain layers in a model.
    The weights are taken from a normal distribution
    with mean = 0, std dev = 0.02.
    :param m: A module or layer in a network
    """
    # classname will be something like:
    # `Conv2d`, `ConvTranspose2d`, `BatchNorm2d`, `Linear`, etc.
    classname = m.__class__.__name__

    # Apply initial weights to convolutional and linear layers
    if classname.find('Linear') != -1 or classname.find('Conv') != -1:
        # zero-centered normal with std dev 0.02, as in the DCGAN paper
        m.weight.data.normal_(0.0, 0.02)
        # layers built with bias=False have no bias to reset
        if m.bias is not None:
            m.bias.data.fill_(0)
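One way to sanity-check an initializer of this kind is to apply it to a small model and inspect the resulting statistics. The sketch below uses a hypothetical `init_normal` function that selects layers with `isinstance` instead of class-name matching; it illustrates the check and is not the notebook's own function.

```python
import torch.nn as nn


def init_normal(m, std=0.02):
    """Hypothetical initializer: N(0, std) weights for conv and linear layers."""
    if isinstance(m, (nn.Conv2d, nn.ConvTranspose2d, nn.Linear)):
        nn.init.normal_(m.weight, mean=0.0, std=std)
        if m.bias is not None:
            nn.init.zeros_(m.bias)


model = nn.Sequential(
    nn.Conv2d(64, 128, 4, stride=2, padding=1, bias=False),
    nn.BatchNorm2d(128),
)
model.apply(init_normal)  # apply() visits every submodule

conv_std = model[0].weight.std().item()    # expected to be close to 0.02
conv_mean = model[0].weight.mean().item()  # expected to be close to 0
bn_weight = model[1].weight                # BatchNorm keeps its default weights of one
```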

Build complete network

Define our models’ hyperparameters and instantiate the discriminator and generator.

def build_network(d_conv_dim, g_conv_dim, z_size):
    # define discriminator and generator
    D = Discriminator(d_conv_dim)
    G = Generator(z_size=z_size, conv_dim=g_conv_dim)

    # initialize model weights
    D.apply(weights_init_normal)
    G.apply(weights_init_normal)

    print(D)
    print()
    print(G)

    return D, G

# Define model hyperparams

d_conv_dim = 32

g_conv_dim = 32

z_size = 100

D, G = build_network(d_conv_dim, g_conv_dim, z_size)

Discriminator(

(conv1): Sequential(

(0): Conv2d(3, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

)

(conv2): Sequential(

(0): Conv2d(32, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(conv3): Sequential(

(0): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(fc): Linear(in_features=2048, out_features=1, bias=True)

)

Generator(

(fc): Linear(in_features=100, out_features=2048, bias=True)

(t_conv1): Sequential(

(0): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(t_conv2): Sequential(

(0): ConvTranspose2d(64, 32, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(t_conv3): Sequential(

(0): ConvTranspose2d(32, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)

)

)
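The `in_features=2048` and `out_features=2048` values in the printed summary follow from the layer arithmetic. The sketch below works through it using the standard output-size formulas for convolutions and transpose convolutions (dilation 1, no output padding). The function names are illustrative, and the 32x32 input size is taken from the generator description above.

```python
def conv_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def t_conv_out(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a transpose convolution."""
    return (size - 1) * stride - 2 * padding + kernel


# Discriminator: three stride-2 convolutions halve the size each time
d_sizes = [32]
for _ in range(3):
    d_sizes.append(conv_out(d_sizes[-1]))
# d_sizes is [32, 16, 8, 4]

# conv3 has 128 channels, so the flattened feature vector has 128 * 4 * 4 entries
fc_features = 128 * d_sizes[-1] ** 2   # 2048

# Generator: three stride-2 transpose convolutions double the size each time
g_sizes = [4]
for _ in range(3):
    g_sizes.append(t_conv_out(g_sizes[-1]))
# g_sizes is [4, 8, 16, 32]
```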

Training on GPU

Check if we can train on GPU. Here, we’ll set this as a boolean variable train_on_gpu.

# Check for a GPU
train_on_gpu = torch.cuda.is_available()
if not train_on_gpu:
    print('No GPU found. Please use a GPU to train your neural network.')
else:
    print('Training on GPU!')

Training on GPU!

Discriminator and Generator Losses

Now we need to calculate the losses for both types of adversarial networks.

Discriminator Losses

- For the discriminator, the total loss is the sum of the losses for real and fake images, d_loss = d_real_loss + d_fake_loss.

- Remember that we want the discriminator to output 1 for real images and 0 for fake images, so we need to set up the losses to reflect that.

Generator Loss

The generator loss will look similar, only with flipped labels. The generator’s goal is to get the discriminator to think its generated images are real.

def real_loss(D_out):
    '''Calculates how close discriminator outputs are to being real.
    param, D_out: discriminator logits
    return: real loss'''
    batch_size = D_out.size(0)
    labels = torch.ones(batch_size)
    if train_on_gpu:
        labels = labels.cuda()
    criterion = nn.BCEWithLogitsLoss()
    loss = criterion(D_out.squeeze(), labels)
    return loss

def fake_loss(D_out):
    '''Calculates how close discriminator outputs are to being fake.
    param, D_out: discriminator logits
    return: fake loss'''
    batch_size = D_out.size(0)
    labels = torch.zeros(batch_size)
    if train_on_gpu:
        labels = labels.cuda()
    criterion = nn.BCEWithLogitsLoss()
    loss = criterion(D_out.squeeze(), labels)
    return loss
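A useful reference point when reading the training log is the loss of an undecided discriminator. If it outputs a logit of 0 (probability 0.5) for every image, the binary cross-entropy is ln 2, about 0.693, for either label, so d_loss is about 1.386 and g_loss about 0.693. The sketch below checks this numerically; the variable names are illustrative. The first logged values in the training output (d_loss 1.4756, g_loss 0.7581) are close to these chance-level numbers.

```python
import math

import torch
import torch.nn as nn

criterion = nn.BCEWithLogitsLoss()

# logit 0 means the discriminator assigns probability 0.5 to "real"
undecided_logits = torch.zeros(8)

d_real_loss = criterion(undecided_logits, torch.ones(8))    # target: real
d_fake_loss = criterion(undecided_logits, torch.zeros(8))   # target: fake
d_loss = d_real_loss + d_fake_loss

# the generator is scored with flipped labels: fakes should look real
g_loss = criterion(undecided_logits, torch.ones(8))

chance_level = math.log(2)   # about 0.693
```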

Optimizers

Define optimizers for our Discriminator (D) and Generator (G)

# params

lr = 0.0004

beta1=0.5

beta2=0.999 # default value

# Create optimizers for the discriminator D and generator G

d_optimizer = optim.Adam(D.parameters(), lr, [beta1, beta2])

g_optimizer = optim.Adam(G.parameters(), lr, [beta1, beta2])

Training

Training will involve alternating between training the discriminator and the generator. We will use our functions real_loss and fake_loss to help us calculate the discriminator losses.

- We should train the discriminator by alternating on real and fake images

- Then the generator, which tries to trick the discriminator and should have an opposing loss function

Saving Samples

def train(D, G, n_epochs, print_every=50):
    '''Trains adversarial networks for some number of epochs
    param, D: the discriminator network
    param, G: the generator network
    param, n_epochs: number of epochs to train for
    param, print_every: when to print and record the models' losses
    return: D and G losses'''

    # move models to GPU
    if train_on_gpu:
        D.cuda()
        G.cuda()

    # keep track of loss and generated, "fake" samples
    samples = []
    losses = []

    # Get some fixed data for sampling. These are latent vectors that are held
    # constant throughout training, and allow us to inspect the model's performance
    sample_size = 16
    fixed_z = np.random.uniform(-1, 1, size=(sample_size, z_size))
    fixed_z = torch.from_numpy(fixed_z).float()
    # move z to GPU if available
    if train_on_gpu:
        fixed_z = fixed_z.cuda()

    # epoch training loop
    for epoch in range(n_epochs):

        # batch training loop
        for batch_i, (real_images, _) in enumerate(celeba_train_loader):

            batch_size = real_images.size(0)
            real_images = scale(real_images)

            # 1. Train the discriminator on real and fake images
            d_optimizer.zero_grad()

            # Compute the discriminator losses on real images
            if train_on_gpu:
                real_images = real_images.cuda()
            D_real = D(real_images)
            d_real_loss = real_loss(D_real)

            # Generate fake images
            z = np.random.uniform(-1, 1, size=(batch_size, z_size))
            z = torch.from_numpy(z).float()
            # move z to GPU, if available
            if train_on_gpu:
                z = z.cuda()
            fake_images = G(z)
            D_fake = D(fake_images)
            d_fake_loss = fake_loss(D_fake)

            # add up loss and perform backprop
            d_loss = d_real_loss + d_fake_loss
            d_loss.backward()
            d_optimizer.step()

            # 2. Train the generator with an adversarial loss
            g_optimizer.zero_grad()

            # Generate fake images
            z = np.random.uniform(-1, 1, size=(batch_size, z_size))
            z = torch.from_numpy(z).float()
            if train_on_gpu:
                z = z.cuda()
            fake_images = G(z)

            # Compute the discriminator losses on fake images
            # using flipped labels!
            D_fake = D(fake_images)
            g_loss = real_loss(D_fake)  # use real loss to flip labels

            # perform backprop
            g_loss.backward()
            g_optimizer.step()

            # Print some loss stats
            if batch_i % print_every == 0:
                # append discriminator loss and generator loss
                losses.append((d_loss.item(), g_loss.item()))
                # print discriminator and generator loss
                print('Epoch [{:5d}/{:5d}] | d_loss: {:6.4f} | g_loss: {:6.4f}'.format(
                    epoch+1, n_epochs, d_loss.item(), g_loss.item()))

        ## AFTER EACH EPOCH##
        # this code assumes your generator is named G, feel free to change the name
        # generate and save sample, fake images
        G.eval()  # for generating samples
        samples_z = G(fixed_z)
        samples.append(samples_z)
        G.train()  # back to training mode

    # Save training generator samples
    with open('train_samples.pkl', 'wb') as f:
        pkl.dump(samples, f)

    # finally return losses
    return losses

# call training function

losses = train(D, G, n_epochs=50)

Epoch [ 1/ 50] | d_loss: 1.4756 | g_loss: 0.7581

Epoch [ 1/ 50] | d_loss: 0.6835 | g_loss: 4.3863

Epoch [ 1/ 50] | d_loss: 0.5454 | g_loss: 2.2342

Epoch [ 1/ 50] | d_loss: 0.7611 | g_loss: 1.7250

Epoch [ 1/ 50] | d_loss: 0.8612 | g_loss: 1.9986

Epoch [ 1/ 50] | d_loss: 1.0474 | g_loss: 1.2333

Epoch [ 1/ 50] | d_loss: 1.0420 | g_loss: 1.4665

Epoch [ 1/ 50] | d_loss: 1.0175 | g_loss: 1.2827

Epoch [ 1/ 50] | d_loss: 1.0866 | g_loss: 1.4246

Epoch [ 2/ 50] | d_loss: 1.1408 | g_loss: 1.3269

Epoch [ 2/ 50] | d_loss: 1.1453 | g_loss: 1.3151

Epoch [ 2/ 50] | d_loss: 1.1817 | g_loss: 1.5866

Epoch [ 2/ 50] | d_loss: 1.1339 | g_loss: 0.9222

Epoch [ 2/ 50] | d_loss: 0.8745 | g_loss: 1.8895

.........

Epoch [ 24/ 50] | d_loss: 3.0976 | g_loss: 6.6547

Epoch [ 24/ 50] | d_loss: 0.7348 | g_loss: 1.2033

Epoch [ 24/ 50] | d_loss: 0.6164 | g_loss: 2.5696

Epoch [ 24/ 50] | d_loss: 0.6439 | g_loss: 1.2263

Epoch [ 24/ 50] | d_loss: 0.6570 | g_loss: 2.5571

Epoch [ 25/ 50] | d_loss: 0.6189 | g_loss: 1.2975

Epoch [ 25/ 50] | d_loss: 0.5116 | g_loss: 1.7852

Epoch [ 25/ 50] | d_loss: 0.3487 | g_loss: 2.4798

Epoch [ 25/ 50] | d_loss: 0.7311 | g_loss: 1.1017

Epoch [ 50/ 50] | d_loss: 0.3821 | g_loss: 2.6701

Epoch [ 50/ 50] | d_loss: 0.4035 | g_loss: 2.1759

Epoch [ 50/ 50] | d_loss: 0.4827 | g_loss: 2.8167

Epoch [ 50/ 50] | d_loss: 0.2443 | g_loss: 2.5251

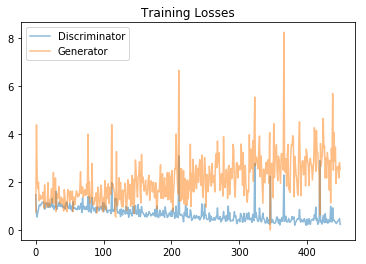

Training loss

Plot the training losses for the generator and discriminator, recorded every print_every batches during training.

fig, ax = plt.subplots()

losses = np.array(losses)

plt.plot(losses.T[0], label='Discriminator', alpha=0.5)

plt.plot(losses.T[1], label='Generator', alpha=0.5)

plt.title("Training Losses")

plt.legend()

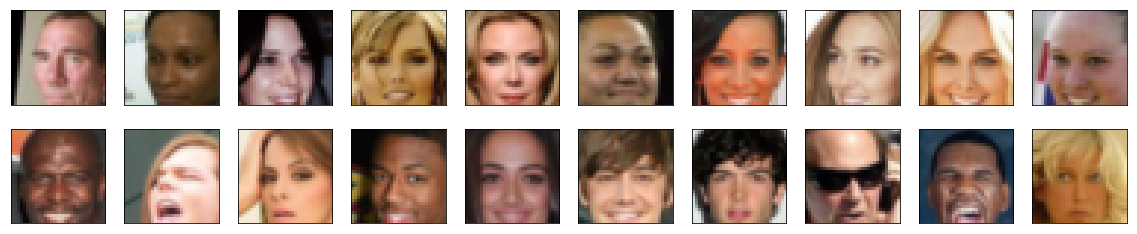

Generator samples from training

View samples of images from the generator.

_ = view_samples(-1, samples)
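The `view_samples` helper is not defined in this excerpt. A minimal sketch of what it might look like, assuming `samples` is a list with one entry per epoch and each entry is a batch of 16 generated images of shape `(16, 3, 32, 32)` with pixel values in the range -1 to 1 (the 2x8 grid layout is also an assumption):

```python
import matplotlib.pyplot as plt
import numpy as np
import torch


def view_samples(epoch, samples):
    """Show the 16 generated images saved for the given epoch in a 2x8 grid."""
    fig, axes = plt.subplots(figsize=(16, 4), nrows=2, ncols=8)
    for ax, img in zip(axes.flatten(), samples[epoch]):
        # detach from the graph and move to the CPU before converting to NumPy
        img = img.detach().cpu().numpy()
        # (C, H, W) -> (H, W, C) for matplotlib
        img = np.transpose(img, (1, 2, 0))
        # undo the -1..1 scaling so pixel values are back in 0..1
        img = np.clip((img + 1) / 2, 0, 1)
        ax.imshow(img)
        ax.axis('off')
    return fig, axes
```

Passing `-1` as the epoch index, as in the call above, selects the samples saved after the final epoch.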

The generated faces are mostly white, which reflects the celebrity faces in the training set. To improve on that, we would need to add more images to the dataset so that it contains a roughly equal number of white and non-white celebrity faces. We could also give all of the images the same background color.

The model size seems adequate because the output face images are small (32x32). Unfortunately, most of the face images do not include the chin, so I couldn’t see how the chin affects the face as a whole.

Adam is the preferred optimizer for DCGAN models. We could also try other optimizers for comparison.